Currently, the answer is a resounding, “What? No… why would you even ask?” But this article isn’t about current AIs. True artificial intelligence is a long, long way off; and frankly, if we run face-first into the singularity, I doubt that our digital progeny will be interested in building interfaces for us.

Learning algorithms are getting a lot smarter, and the number of things we can do with them is rapidly expanding. Current implementations have massive flaws: facial recognition produces an uncomfortable number of false positives; AI-based recruiting means that resumes have to be built around keywords; YouTube’s algorithm is a dumpster fire. But the overall trend is toward smarter and smarter AI.

But can that AI ever design for regular people? Let’s get speculative, baby!

The Big Problem for AI Designers

I mean, can regular people even truly design things for other people? Well yes, but we collectively get it wrong about as often as we get it right. If we can instill in an AI a very cynical view of our human cognitive abilities, then we can probably teach them to design for us.

It’s not because “people are dummies, hurr hurr”, although that’s a part of the issue. Mostly, though, our cognitive and reasoning skills just get impaired all the time, by dozens of different things. The human brain is a marvel in pretty much every sense of the word. We don’t currently have computers with anything like the brain’s capacity for adaptation, and few server warehouses even approach the brain’s estimated, well, capacity (as in storage), at least not by the higher estimates.

The problem with our brains is that they’re being used by every part of our body, all the time. Then bits of them are constantly dying off and being reborn. And then the rest is being used to worry about things you did ten years ago, so you don’t have to worry so much about what’s going on right now.

Compared to computers, even people who don’t have ADHD are easily distracted.

AI, on the other hand, is focused like a laser. You tell it to look for keywords, and it finds them. You tell it to look for x, y, and z, and adapt itself based on what it finds, and it will do that. That’s one reason why Microsoft’s AI, Tay, got real racist, real fast, when exposed to Twitter.

Set an AI to find the “Buy” button on a web page, and so long as it has somewhat flexible criteria of what constitutes a “Buy” button, it will probably find that button quickly. Set the world’s smartest human to perform the same task, and they just might get lost on the way because they don’t navigate through raw code, keywords in hand, like a bot does. They might get distracted by a shiny ad, a knock at the door, or the existential dread that they usually manage to ignore.
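To make the bot's side of that comparison concrete, here is a minimal sketch of what "navigating through raw code, keywords in hand" might look like. It uses only Python's standard `html.parser`; the keyword list, the `BuyButtonFinder` class, and the sample page are all made up for illustration, and a real crawler would need far more flexible criteria than this.

```python
from html.parser import HTMLParser

# Hypothetical keyword list (an assumption for this sketch, not a standard).
BUY_WORDS = ("buy", "add to cart", "purchase", "checkout")


class BuyButtonFinder(HTMLParser):
    """Collects the labels of <button> and <a> elements that look like a buy button."""

    def __init__(self):
        super().__init__()
        self._open_tag = None  # the clickable tag we are currently inside, if any
        self._text = []        # text fragments seen inside that tag
        self.matches = []      # labels of every element that matched a keyword

    def handle_starttag(self, tag, attrs):
        # Only start tracking if we are not already inside a clickable element.
        if tag in ("button", "a") and self._open_tag is None:
            self._open_tag = tag
            self._text = []

    def handle_data(self, data):
        if self._open_tag is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._open_tag:
            # Collapse whitespace, then compare case-insensitively.
            label = " ".join("".join(self._text).split())
            if any(word in label.lower() for word in BUY_WORDS):
                self.matches.append(label)
            self._open_tag = None


def find_buy_buttons(html):
    """Return the labels of all buy-like buttons and links found in the HTML."""
    finder = BuyButtonFinder()
    finder.feed(html)
    finder.close()
    return finder.matches


# A made-up page: one shiny ad, some copy, and the button we actually want.
page = """
<div class="ad"><a href="/shiny">Look at this shiny thing!</a></div>
<p>Some product copy that a human might actually read.</p>
<button class="cta">Buy Now</button>
"""
print(find_buy_buttons(page))
```

Notice that the shiny ad never registers: the bot only sees labels and keywords, so it has nothing to be distracted by. That single-mindedness is exactly what a human visitor lacks.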

Bringing real people to the point of sale, or at least to the point of your website, is a bit like herding cats, even at the best of times. Teaching an AI to provide multiple paths to multiple objectives, which objectives to prioritize, and how to do it without alienating the human users is a complex endeavor to say the least. It would literally have to be programmed to account for the fact that, for the species that invented computers, sometimes we’re not all that bright, or logical, or efficient, or focused, or driven… you get the picture.

Even the best of us have many moments of intellectual prowess that can be most charitably described as “interesting”. Uncharitable descriptions of those moments may contain lots of four-letter words.

The Project Specs

So we have to teach an AI all of the things that humans have been doing for millennia: We have to teach them about what humans consider beautiful, and how to adapt to those constantly-changing aesthetic standards. We have to teach them to be efficient, but not so efficient that it makes people uncomfortable. We have to teach them to account for our distractibility, our sense of whimsy, and every other factor that we can possibly imagine.

Someone even posited that we might have to train them to “think dumber” in order to design for us. I personally think it’s going to be a lot more complex than that. We’re going to have to train AIs in one discipline that we’ve had trouble teaching to people: empathy.

Designer AIs of the future, if we want them to be as good as or better than us, aren’t just going to have to factor in all the potential issues we can think of; we’ll have to teach them to recognize new and unfamiliar human circumstances (and sometimes new and unfamiliar human failings) in order to adapt and design a workaround. We can’t possibly train them in the worldwide human experience. For one thing, that experience keeps changing; for another, even the most accomplished anthropologists would never claim to understand the whole human experience.

Some of the more arrogant programmers might make that claim, but we’ll just keep them locked up in Silicon Valley where they belong.

Conclusion

Given the complexity of the task, I’d argue that we either have to be content with template-based AI-made designs that never get better than “decent”, or we have to invent a full-on artificial consciousness, and hope that we have something it wants that we can provide in return for the best UIs this world has ever seen.

But honestly, that almost seems like a waste of a good killbot.

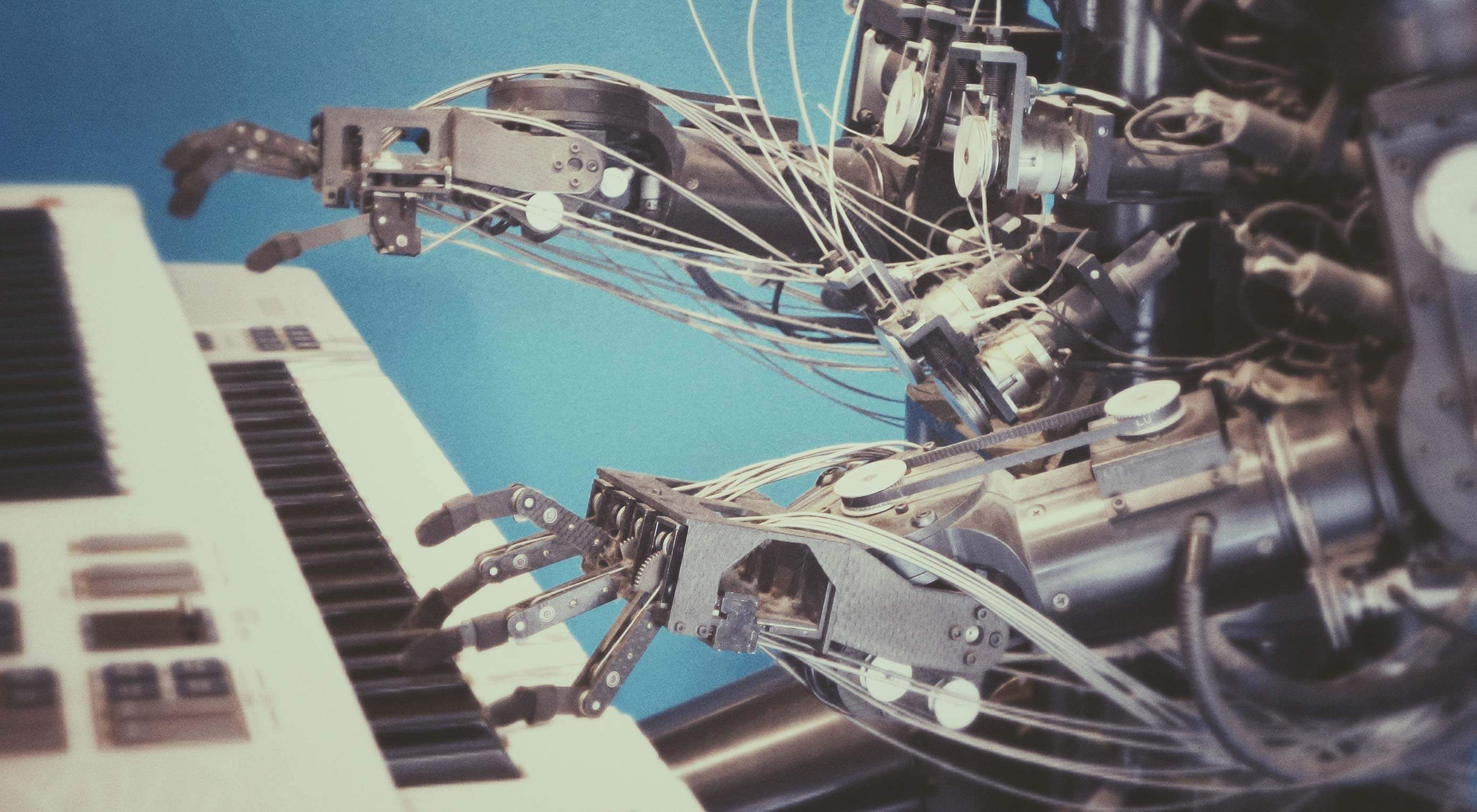

Featured image via Unsplash.

from Webdesigner Depot https://ift.tt/32124fV
