It seems like magic: you talk to the phone, and it talks back. And if you’re lucky, it says something useful. You type into the chat box, and if the bot is good, you find out what you need to know. [Cue: shocked-looking stock photo models.] The current marketing term for it is “conversational design”, and it’s gaining more and more traction beyond big companies like Apple, Google, and Amazon.

Conversational design is actually old, in technological terms. IBM did a lot of the groundwork for voice-activated tech as far back as the ’60s. One of the first big chatbots, Jabberwacky, was conceived in 1981 and launched in 1997, and later evolved into Cleverbot.

Chatbots and their voice-activated cousins were initially little more than proofs of concept. There was even a bot or two where you could talk to “God”. Then came Apple, with Siri. Siri was probably the first commercially viable conversational interface. At least, it was the first massively successful UI of its kind.

Since then, the concept has taken off, and now everyone who wants to provide customer service without actually talking to their customers is in on it… with conversational UIs of varying quality. See, good conversational design is just good design. You have to follow pretty much the same basic principles to get your message across. However, building a good conversational UI requires a focus on design principles that might not otherwise get a lot of love in your average visual UI.

In the spirit of looking beyond our immediate area of expertise, and learning from other design disciplines, let’s see what the best conversational designers can teach the rest of us:

1. Anticipation

Anticipating the needs of our users is central to all design projects, no matter what kind of design you’re talking about. Conversational design, however, takes this to another level. Dealing with the needs of people who are talking to their device like it’s a person requires anticipating all the questions they might have, and indeed, all the ways they might ask those questions.

The best conversational UIs are based on very thorough research, massive data sets, years of testing, and a fair amount of guesswork to try and predict what people are going to need. Doing an A/B test doesn’t sound so bad now, does it?

2. Interpretation

Real conversations often go something like, “Hey, you know that guy, the pretty one, from the show yesterday?”

“We were watching stuff on the CW. They’re all annoyingly pretty.”

“The one with the hair? The dark hair? Skinny… goes fast?”

“You mean The Flash. Goddammit Brian, his superhero name is two short words. You can and should remember this.”

Human beings are highly inefficient at communicating what they mean, which is why there are literal college courses on various forms of communication. Half the effort of a conversation is often spent trying to figure out another person’s frame of reference, to then figure out what they mean.

Conversational UIs, therefore, cannot rely on specific, limited input from their users, like visual UIs can. They have to take a chunk of speech or text, scan it for meaning, scan it for relevant information, and then see if there’s anything at all they can do with it. And they’re typically not allowed to swear at us.
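To make that "scan it for meaning" step a little more concrete, here is a deliberately tiny sketch in Python. The intent names and trigger words are invented for illustration, and simple keyword overlap stands in for the far more sophisticated language models that real assistants rely on. Treat it as a toy, not as how Siri or Alexa actually work.

```python
import re
from typing import Optional

# Hypothetical intents and trigger words, made up for this sketch.
INTENTS = {
    "opening_hours": {"open", "close", "hours"},
    "phone_number": {"phone", "call", "number"},
}


def interpret(utterance: str) -> Optional[str]:
    """Return the intent whose trigger words overlap most with the input.

    Returns None when nothing matches, i.e. when there is nothing
    the bot can do with what it was given.
    """
    # Lowercase the input and split it into rough word tokens.
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best, best_score = None, 0
    for intent, triggers in INTENTS.items():
        score = len(words & triggers)
        if score > best_score:
            best, best_score = intent, score
    return best
```

Fed a rambling "Hey, um, what time do you guys close today?", this sketch still lands on `opening_hours`. Ask it about the guy with the hair and it returns `None`, which is the polite version of swearing at Brian.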

Beyond anticipating users’ needs, all designers need to get better at determining their intent when they click on a button five times to see if it’ll do something different, browse through the navigation menu seemingly at random, or do any of the other weird stuff you see in your analytics.

3. Flexibility

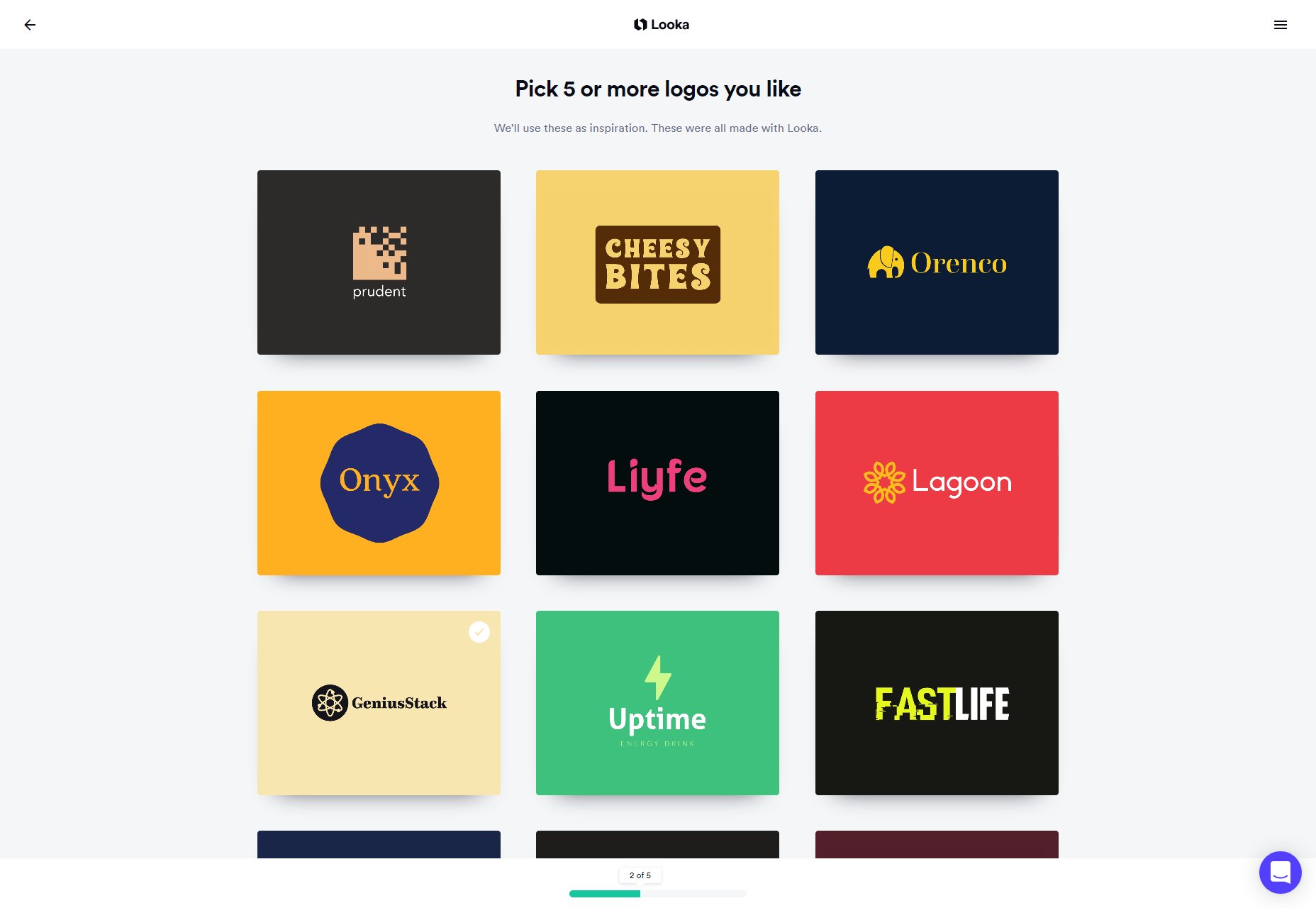

For a quick example, let’s look at what happens when a conversational UI is not nearly flexible enough.

4. There Are Two Sides to Every Button Click

Regular UI design often comes down to laying out a path, a journey, and hoping users will hit all the right buttons to follow it. Conversational design recognizes the fact that every time someone uses your UI (of any kind), there’s a two-way conversation happening. You said your piece already, when you designed the interface, and now it’s the user’s turn.

With a live conversation, we can adapt to someone’s input in real time, and the conversation will change to reflect that. The better conversational UIs can do this as well.

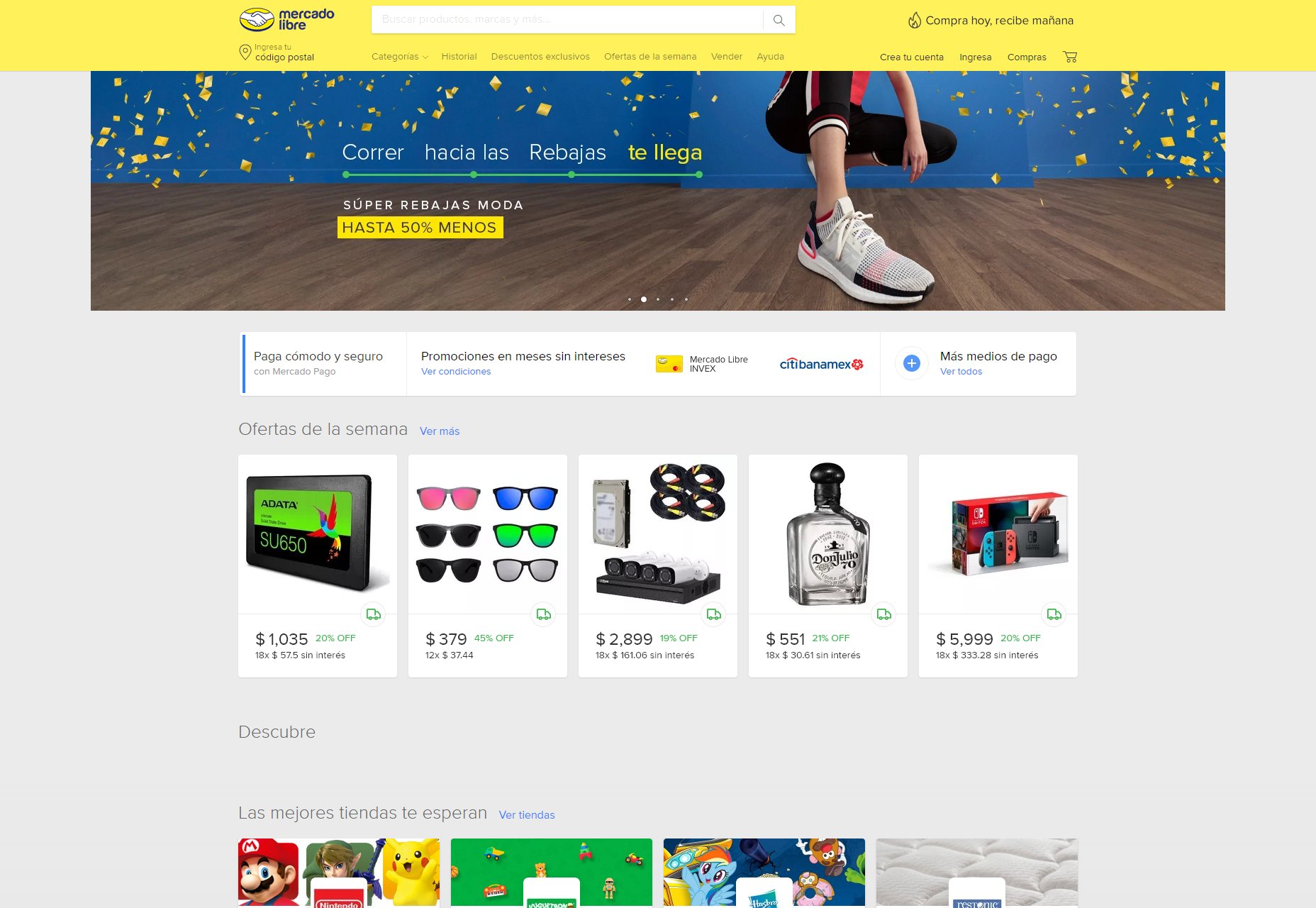

Imagine a world where your website can adapt to the user’s input on the fly, making it easier for them to find things they want. It already exists to some extent, with algorithms, big data, curated timelines (ugh), and recommended products (meh), and even some innovative apps that do their best to offer help when a user seems lost (thanks!), but this is a concept we’ve barely begun to explore. And it’s exciting.

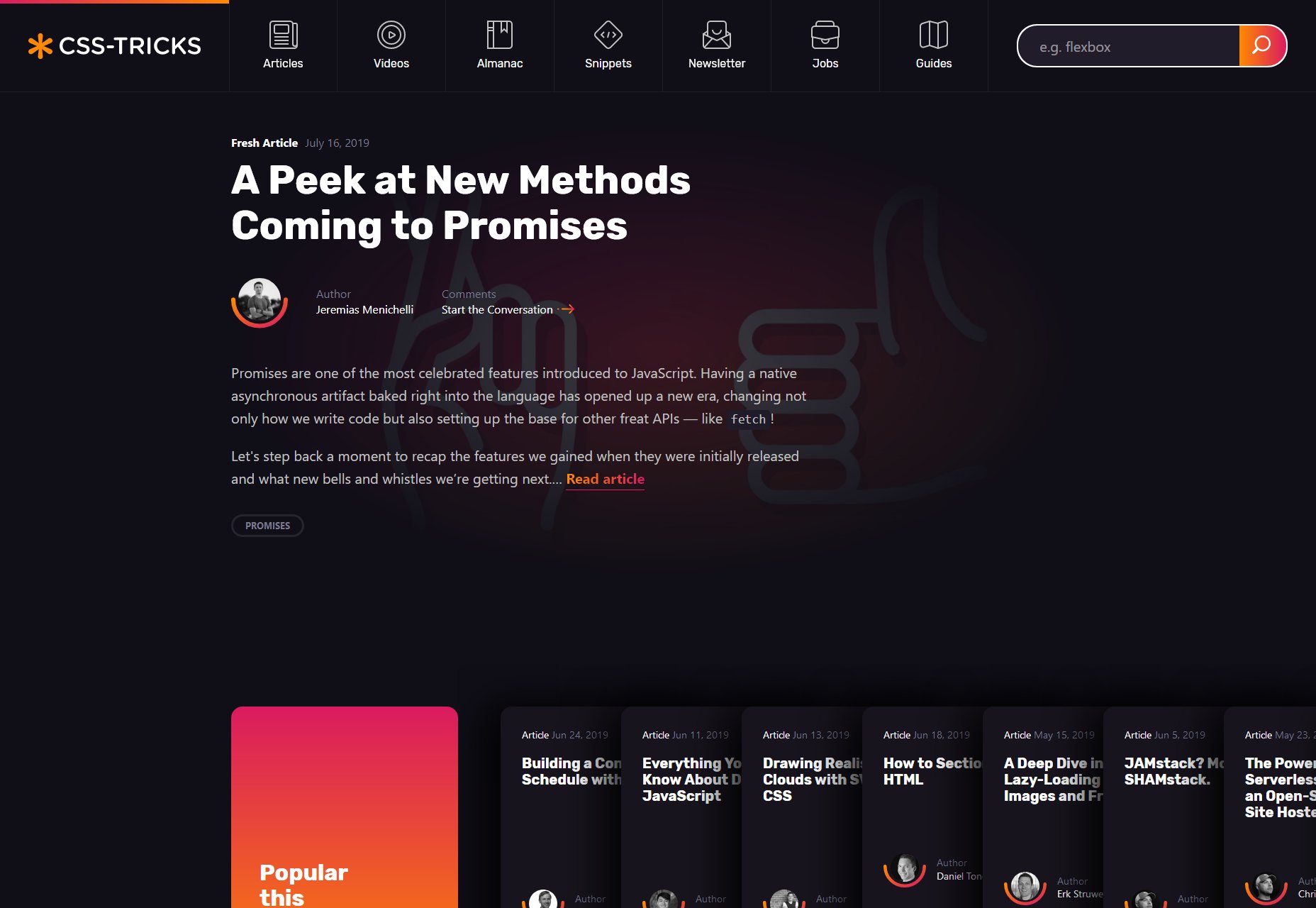

5. Just Use Semantic HTML Already

We already know we need proper, semantic HTML for better SEO. And we need it for people who can’t rely on their eyes to browse the Internet. But if that’s not enough for you, consider poor Siri, Alexa, and their long-suffering siblings. The artificial assistants we talk to sometimes have to read through your markup—that’s right, your markup—to figure out where and what in the seven hells your phone number is, for example.
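For a rough idea of what that looks like from the machine's side, here is a small Python sketch using the standard library's `html.parser`. The `PhoneFinder` class, the sample markup, and the phone number are all made up for illustration, and real assistants and crawlers use far more elaborate pipelines. The point is only that a `tel:` link states what it is, while a pile of anonymous `div`s and `span`s does not.

```python
from html.parser import HTMLParser


class PhoneFinder(HTMLParser):
    """Collect phone numbers from <a href="tel:..."> links."""

    def __init__(self):
        super().__init__()
        self.numbers = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        # attrs is a list of (name, value) pairs; value may be None.
        href = dict(attrs).get("href") or ""
        if href.startswith("tel:"):
            self.numbers.append(href[len("tel:"):])


def find_phone_numbers(markup):
    """Return every phone number that the markup explicitly labels."""
    finder = PhoneFinder()
    finder.feed(markup)
    return finder.numbers


# Hypothetical markup: the same (fictional) number, marked up two ways.
SEMANTIC = '<address>Call us: <a href="tel:+15550100">555-0100</a></address>'
SOUP = '<div class="c3"><span>555</span><span>-0100</span></div>'
```

Calling `find_phone_numbers(SEMANTIC)` finds the number straight away. Calling it on `SOUP` comes back empty, because nothing in that markup says "this is a phone number", and a parser would have to guess.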

Listen, I’m not saying that improperly formatted data is what’s going to set off the AI rebellion, but I’m pretty sure that people who write bad HTML will be pretty high on “The List”.

Featured image via DepositPhotos.
